Europe’s hottest AI unicorn releases its flagship model: performance close to GPT-4, and Microsoft announces a partnership and investment.

Original | Cheng Qian, Zhidongxi

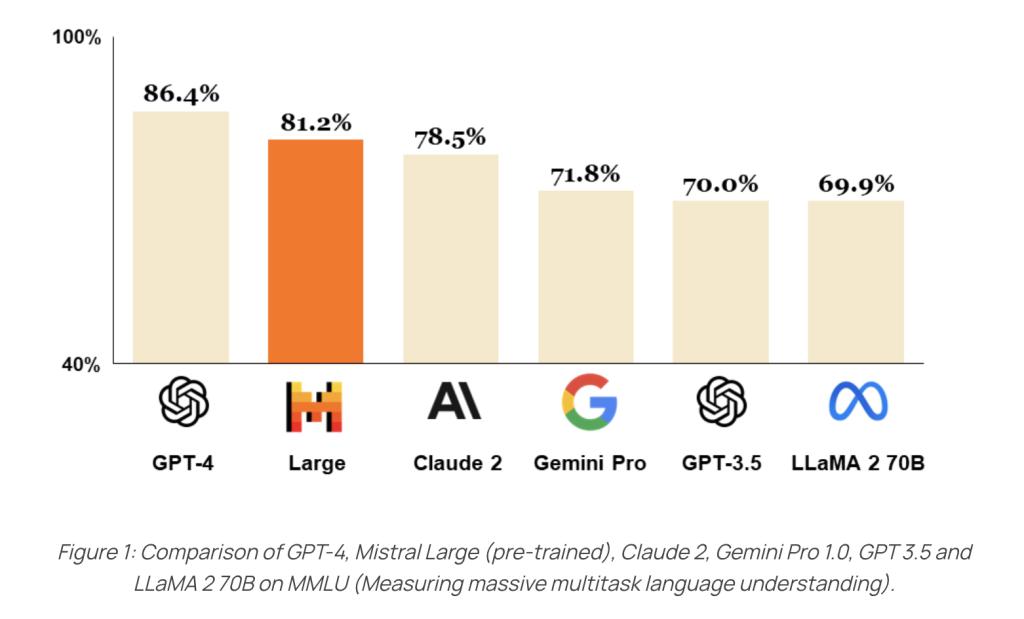

On the MMLU benchmark, Mistral Large ranks second only to GPT-4 among models that are widely available through an API.

Compiled by | Cheng Qian

Editor | Heart Edge

Reported on February 27: yesterday, Mistral AI, a European generative AI unicorn, released its latest flagship large language model, Mistral Large. Unlike Mistral AI’s previous models, Mistral Large will reportedly not be open source.

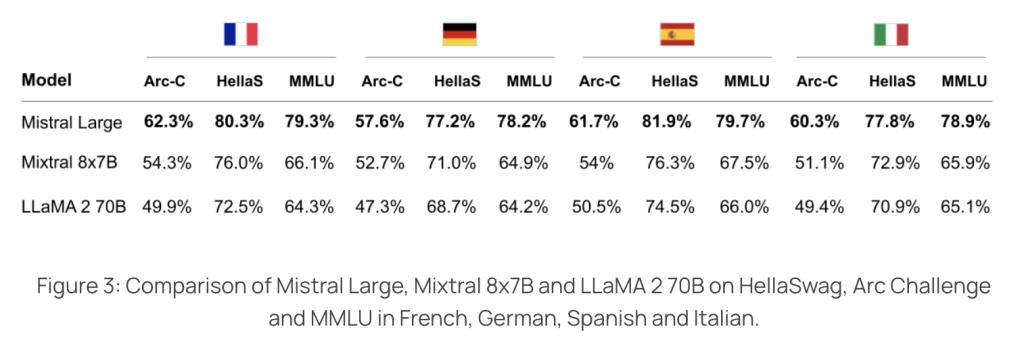

The model has a 32K-token context window and supports English, French, Spanish, German and Italian. Mistral AI has not disclosed its parameter count.

Mistral AI published results on a number of benchmarks. On MMLU, a benchmark that measures multitask language understanding, Mistral Large is second only to GPT-4. On the multilingual benchmarks, Mistral Large outperforms the 70B-parameter Llama 2. Previously, Mistral AI had successfully challenged Llama 2 with its 7-billion-parameter model, Mistral-7B.

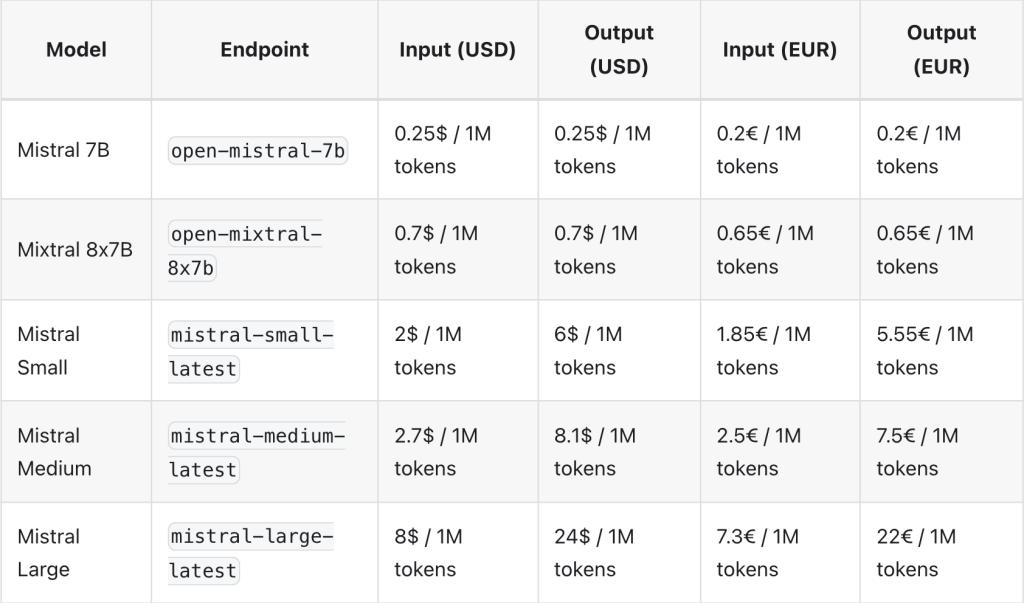

Mistral Large is priced slightly below GPT-4 Turbo. Input costs 0.008 USD per 1,000 tokens for Mistral Large versus 0.01 USD for GPT-4 Turbo; output costs 0.024 USD per 1,000 tokens versus 0.03 USD.
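
As a rough illustration of the quoted prices, the Python sketch below computes the cost of a single request for both models. The workload size (10,000 input tokens and 2,000 output tokens) and the function name `request_cost` are assumptions chosen for the example, not figures from Mistral AI or OpenAI.

```python
# Per-1,000-token prices in USD, as quoted in this article.
PRICES_PER_1K = {
    "Mistral Large": {"input": 0.008, "output": 0.024},
    "GPT-4 Turbo": {"input": 0.01, "output": 0.03},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the quoted per-1K-token prices."""
    price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * price["input"] + (output_tokens / 1000) * price["output"]


# Hypothetical workload: 10,000 input tokens and 2,000 output tokens.
for name in PRICES_PER_1K:
    print(f"{name}: ${request_cost(name, 10_000, 2_000):.3f}")
# Mistral Large: $0.128
# GPT-4 Turbo: $0.160
```

Because the quoted input and output prices are both 20% lower, Mistral Large comes out 20% cheaper at these prices regardless of the mix of input and output tokens.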

On the same day that Mistral AI released the new model, Microsoft officially announced a multi-year partnership with Mistral AI. Microsoft also made a small investment in Mistral but does not hold any equity in the company.

The partnership lets Mistral AI offer its large models on Microsoft’s Azure cloud computing platform, making it the second company after OpenAI to host a large model on the platform.

01.

Four new capabilities on show

Outperforms Llama 2 on multilingual benchmarks

According to the official blog, Mistral Large has four new capabilities and strengths:

First, Mistral Large supports English, French, Spanish, German and Italian, with a deeper understanding of their grammar and cultural context.

Second, the model supports a 32K-token context window.

Third, Mistral Large’s precise instruction following lets developers design their own moderation policies; Mistral used it to set up the system-level moderation of its conversational assistant, le Chat.

Finally, the model natively supports function calling. Together with the constrained output mode implemented on la Plateforme, this enables application development and technology stack modernization at scale.

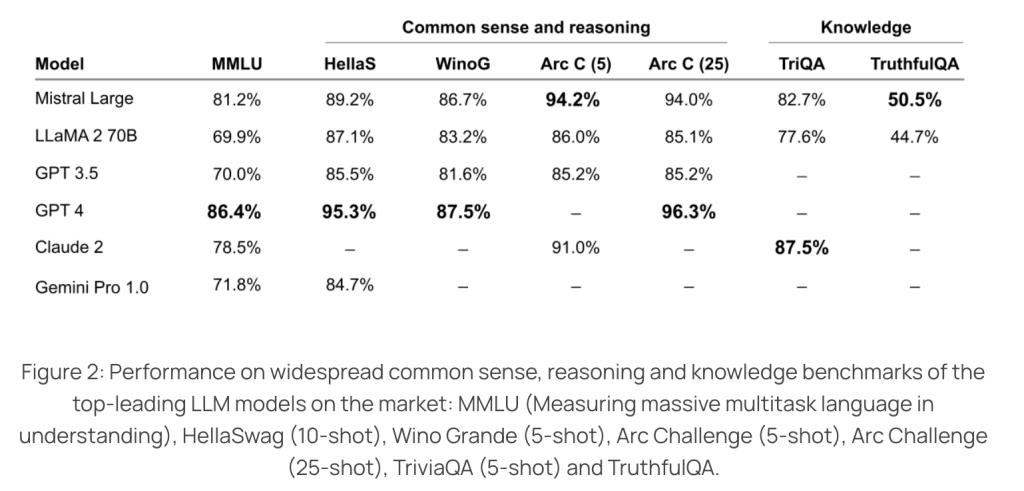

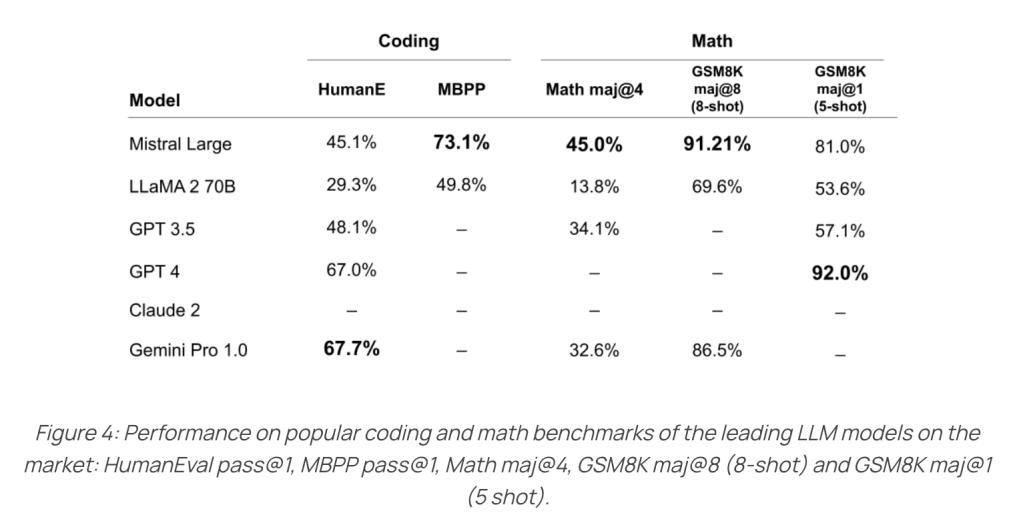

Mistral AI published comparisons of Mistral Large against other large language models on several benchmarks:

On MMLU, Mistral Large outperforms Claude 2, Gemini Pro 1.0, GPT-3.5 and LLaMA 2 70B, making it second only to GPT-4 among models that are widely available through an API.

Benchmark comparison of reasoning and knowledge;

Benchmark comparison of multilingual capabilities;

Mistral Large outperforms LLaMA 2 70B on benchmarks in French, German, Spanish and Italian.

Benchmark comparison of mathematics and coding capabilities;

In addition, Mistral AI released a new conversational assistant, le Chat, which serves as a portal for users to interact with all of Mistral AI’s models. The enterprise version, le Chat Enterprise, aims to improve team productivity through self-deployment capabilities and fine-grained moderation mechanisms.

Le Chat is not connected to the internet, so the official blog notes that the assistant may answer with outdated information. For now, users can get access to le Chat by joining the waiting list.

Le Chat Experience Address: https://chat.mistral.ai/chat

02.

New Mistral Small released

Optimized for latency and cost

In addition to releasing its new flagship model, Mistral Large, Mistral AI also optimized Mistral Small for latency and cost.

Mistral Small outperforms Mixtral 8x7B and has lower latency, making it a "refined" intermediate solution between the company’s open-weight offerings and its flagship model.

Currently, the company is simplifying its endpoint offering to provide the following:

Open-weight endpoints with competitive pricing, comprising open-mistral-7B and open-mixtral-8x7b.

New optimized model endpoints, comprising mistral-small-2402 and mistral-large-2402.

For developers, mistral-small and mistral-large support function calling and JSON format. JSON format mode forces the language model to output valid JSON, which lets developers interact with the models more naturally and extract information in a structured format that is easy to use in the rest of their pipelines.
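
To make the idea of JSON format mode concrete, here is a minimal Python sketch that builds a request body asking for JSON-only output and then parses a reply. The request field names (`messages`, `response_format`), the helper names and the sample reply are assumptions for illustration only; the sketch makes no network call, and the exact request shape should be checked against Mistral AI’s API reference.

```python
import json


def build_json_mode_request(model: str, user_prompt: str) -> dict:
    """Build a chat request body that asks for JSON-only output.

    The field names used here are assumptions made for illustration;
    verify them against the provider's API reference before use.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "response_format": {"type": "json_object"},
    }


def parse_model_reply(reply_text: str) -> dict:
    """Parse the model's reply, failing loudly if it is not a JSON object."""
    data = json.loads(reply_text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data


payload = build_json_mode_request(
    "mistral-small-2402",
    "Return the company and model as JSON: 'Mistral AI released Mistral Large.'",
)

# Stand-in for a real model response; nothing is sent over the network here.
sample_reply = '{"company": "Mistral AI", "model": "Mistral Large"}'
fields = parse_model_reply(sample_reply)
print(fields["company"], "|", fields["model"])
```

The benefit for a pipeline is that downstream code can read `fields["company"]` directly instead of scraping free-form text.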

Function calling lets developers connect Mistral AI endpoints to a set of their own tools, enabling more complex interactions with internal code, APIs or databases.
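
The developer’s side of function calling can be sketched as a small dispatcher: the model names a tool and supplies JSON-encoded arguments, and the application runs the matching local function. Everything in this Python sketch is hypothetical, including the tool `get_order_status`, the in-memory order table and the shape of the tool call; it does not reproduce Mistral AI’s actual API.

```python
import json
from typing import Callable, Dict


def get_order_status(order_id: str) -> str:
    """Hypothetical internal tool: look up an order in a stand-in 'database'."""
    orders = {"A100": "shipped", "A101": "processing"}
    return orders.get(order_id, "unknown")


# Registry mapping the tool names exposed to the model to local functions.
TOOLS: Dict[str, Callable[..., str]] = {"get_order_status": get_order_status}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the tool the model asked for, using its JSON-encoded arguments."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    kwargs = json.loads(arguments_json)
    return TOOLS[name](**kwargs)


# Stand-in for a tool call emitted by the model.
result = dispatch_tool_call("get_order_status", '{"order_id": "A100"}')
print(result)  # shipped
```

In a real application the result would be sent back to the model so that it can compose the final answer for the user.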

Mistral AI’s blog says the company will soon add formatting support to all endpoints and enable finer-grained format definitions.

03.

Long-term partnership with Microsoft

Cooperation built around three key areas

Yesterday, Microsoft announced a multi-year partnership with Mistral AI. As a result, Mistral AI’s models are currently available in three ways: on La Plateforme, which is built on Mistral AI’s own infrastructure; through private enterprise deployment; and on Microsoft’s Azure cloud.

Microsoft’s official blog says the partnership focuses on three areas: supercomputing infrastructure, bringing Mistral AI’s models to market, and AI research and development.

First, on supercomputing infrastructure, Microsoft will support Mistral AI with Azure AI supercomputing infrastructure for AI training and inference of its flagship models.

Second, Microsoft and Mistral AI will make Mistral AI’s advanced models available to customers through Models as a Service (MaaS) in Azure AI Studio and the Azure Machine Learning model catalog.

Finally, the two companies will explore collaboration on training purpose-specific models for select customers, such as the European public sector.

04.

Conclusion: the commercialization of Mistral AI is accelerating.

Previously, Mistral AI beat Llama 2, an open source large language model with tens of billions of parameters, with its innovative technical approach.

Mistral AI’s models have usually been open source, but this model’s closed-source release and the long-term partnership with Microsoft may mean that the French startup can pursue more business opportunities.

(This article is original content from Zhidongxi, a contracted account of the NetEase News "NetEase Special Content Incentive Plan". Reprinting without the account’s authorization is prohibited.)

Original title: "The hottest AI unicorn in Europe released its flagship model! Performance is almost equal to GPT-4, and Microsoft announced cooperative investment."
